Introduction

At Qubole, many of our customers run their Hadoop clusters on AWS EC2 instances. Each of these instances is a Linux guest on a Xen hypervisor. Traditionally, each guest’s network traffic passes through the hypervisor, which adds overhead and reduces the usable bandwidth. EC2 now supports Single Root I/O Virtualization (SR-IOV, called Enhanced Networking in AWS terminology) on some of the new instance types, wherein each physical Ethernet NIC presents itself as multiple independent PCIe Ethernet NICs, each of which can be assigned to a Xen guest.

Many resources on the web (such as this) have documented improvements in bandwidth and latency when using enhanced networking. Our helpful partner engineers at AWS also mentioned it as low-hanging fruit that we should investigate. Hadoop is very network I/O intensive: large amounts of data are transferred between nodes as well as between S3 and the nodes, so it stands to reason that Hadoop workloads would benefit from this change. However, as someone once said, following recommendations based on someone else’s benchmarks is like taking someone else’s prescribed medicine, so we set out to benchmark our Hadoop offering on instances with Enhanced Networking. This post describes the data obtained from this study.

Benchmark Setup

Enabling enhanced networking required us to make the following changes to our machines:

- Upgrade the Linux kernel to 3.14 from 2.6.

- Use HVM AMIs for all supported instance types, since enhanced networking is only supported on HVM AMIs.
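Once both changes are in place, it is worth confirming from inside the guest that the SR-IOV virtual function driver is actually in use. The sketch below is one way to do that, not the procedure we used in this study: it parses the text printed by `ethtool -i <interface>` and checks the `driver:` line. The helper names are hypothetical, and treating `ixgbevf` as the expected driver is an assumption based on the AWS documentation for this generation of instance types.

```python
from typing import Optional, Sequence


def parse_ethtool_driver(ethtool_output: str) -> Optional[str]:
    """Return the value of the 'driver:' line from `ethtool -i <iface>` output."""
    for line in ethtool_output.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "driver":
            return value.strip()
    return None  # no driver line found


def uses_sriov_vf_driver(ethtool_output: str,
                         vf_drivers: Sequence[str] = ("ixgbevf",)) -> bool:
    """True if the interface is bound to one of the expected VF drivers.

    The default driver name is an assumption; pass your own list if your
    instance type uses a different virtual function driver.
    """
    return parse_ethtool_driver(ethtool_output) in vf_drivers


# To check a live machine (assuming the interface is named eth0):
#   import subprocess
#   text = subprocess.run(["ethtool", "-i", "eth0"],
#                         capture_output=True, text=True, check=True).stdout
#   print(uses_sriov_vf_driver(text))
```

Keeping the parsing separate from the command invocation makes the check easy to run against saved output from many nodes.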

We designed our test setup to understand the performance difference due to each of these factors: the kernel upgrade, the move from PV to HVM AMIs, and Enhanced Networking itself.

- We used the traditional Hadoop Terasort and testDFSIO benchmarks on clusters ranging in size from 5 to 20 nodes. Qubole also offers Apache Spark and Presto as a Service; ideally, we would have liked to benchmark them against these upgrades as well, and we hope to do so in the future.

- There have been some improvements in the KVM and Xen hypervisor stacks in recent versions of the Linux kernel. To exclude performance improvements due to these changes, we tested the PV AMI with both the 2.6 and 3.14 kernels.

- HVM AMIs are generally expected to perform better than PV AMIs. To keep this from skewing the benchmarks, we tested PV against HVM AMIs (both on the 3.14 kernel).

- Finally, we ran the benchmarks for clusters of similar configurations with and without Enhanced Networking enabled.

Thus we can say with reasonable confidence that the benchmarks we obtained for Enhanced Networking are exclusive of benefits obtained by the collateral changes that we made to support it. For brevity, not all the benchmarks between different instance types are listed here. Only a few are listed – sufficient to demonstrate the benefits of the upgrades.

Benchmark Results

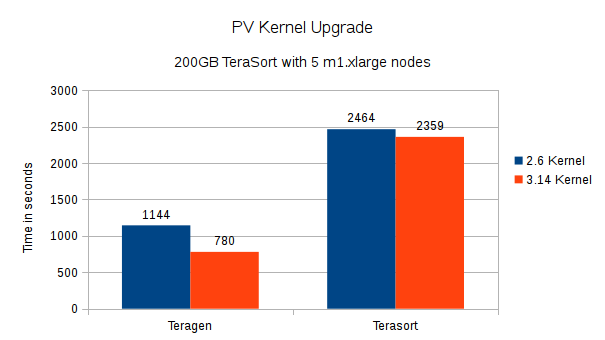

PV kernel upgrade

| Benchmark | 2.6 kernel (time in seconds) | 3.14 kernel (time in seconds) | Change (%) |
| --- | --- | --- | --- |
| Teragen | 1144 | 780 | -31.82% |
| Terasort | 2464 | 2359 | -4.26% |

With the new 3.14 kernel, we saw a ~32% improvement in TeraGen and a ~4% improvement in TeraSort times.

| Benchmark | 2.6 kernel | 3.14 kernel | Change (%) |
| --- | --- | --- | --- |
| testDFSIO Write | | | |
| Throughput (MB/sec) | 14.8215218279145 | 16.2628995286198 | 9.72% |
| Average IO rate (MB/sec) | 15.4801158905029 | 16.434175491333 | 6.16% |
| Time taken (s) | 901.817 | 725.172 | -19.59% |
| testDFSIO Read | | | |
| Throughput (MB/sec) | 6202.12733 | 7170.514843 | 15.61% |
| Average IO rate (MB/sec) | 7031.760742 | 8717.21875 | 23.97% |
| Time taken (s) | 24.7 | 23.287 | -5.72% |
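The Change (%) column in these tables is the relative difference between the two configurations, measured against the baseline. As a minimal sketch (the function name is ours, for illustration only), the figures above can be reproduced like this. For times a negative value is an improvement, while for throughput a positive value is.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent of `before`."""
    return (after - before) / before * 100.0


# (2.6 kernel, 3.14 kernel) run times in seconds, taken from the table above
runs = {"Teragen": (1144, 780), "Terasort": (2464, 2359)}

for name, (old, new) in runs.items():
    print(f"{name}: {percent_change(old, new):+.2f}%")
# Teragen: -31.82%
# Terasort: -4.26%
```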

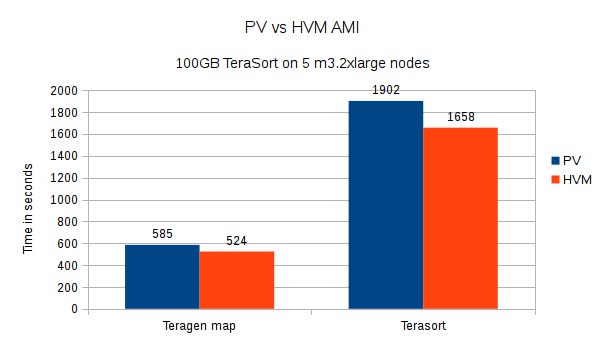

PV vs HVM virtualization

In these tests, both clusters were running instances with the new 3.14 Linux kernel. HVM AMIs gave us a ~10.4% improvement in TeraGen and a ~13% improvement in TeraSort times as compared to their PV counterparts.

| Benchmark | PV AMI (time in seconds) | HVM AMI (time in seconds) | Change (%) |
| --- | --- | --- | --- |
| Teragen | 585 | 524 | -10.43% |
| Terasort | 1902 | 1658 | -12.83% |

| Benchmark | PV AMI | HVM AMI | Change (%) |
| --- | --- | --- | --- |
| testDFSIO Write | | | |
| Throughput (MB/sec) | 17.53389302 | 19.31770634 | 10.17% |
| Average IO rate (MB/sec) | 18.33335686 | 20.04224205 | 9.32% |
| Time taken (s) | 376.843 | 348.93 | -7.41% |
| testDFSIO Read | | | |
| Throughput (MB/sec) | 3296.304842 | 3389.141192 | 2.82% |
| Average IO rate (MB/sec) | 4763.108887 | 5134.731445 | 7.80% |
| Time taken (s) | 22.513 | 20.492 | -8.98% |

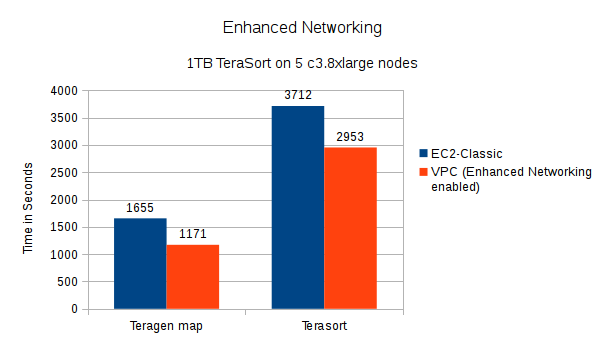

Enhanced networking

Enhanced networking only works in VPC environments, so these tests were performed by running one cluster in a VPC and another in EC2-Classic. In our testing, we also found that the maximum benefit of this feature was obtained on instances that support 10Gb Ethernet (c3.8xlarge, r3.8xlarge, and i2.8xlarge). Hence we report the benchmark results for c3.8xlarge instances here:

| Benchmark | Cluster in EC2-Classic (time in seconds) | Cluster in VPC (time in seconds) | Change (%) |
| --- | --- | --- | --- |
| Teragen | 1655 | 1171 | -29.24% |
| Terasort | 3712 | 2953 | -20.45% |

We found that Enhanced Networking gave us an impressive ~29% reduction in TeraGen and ~20% reduction in TeraSort times.

| Benchmark | Cluster in EC2-Classic | Cluster in VPC | Change (%) |
| --- | --- | --- | --- |
| testDFSIO Write | | | |
| Throughput (MB/sec) | 11.3076844 | 26.64146881 | 135.60% |
| Average IO rate (MB/sec) | 14.81562042 | 26.99263 | 82.19% |
| Time taken (s) | 562.802 | 152.548 | -72.89% |
| testDFSIO Read | | | |
| Throughput (MB/sec) | 2086.854901 | 4705.882353 | 125.50% |
| Average IO rate (MB/sec) | 3664.107422 | 5546.874023 | 51.38% |
| Time taken (s) | 26.447 | 18.444 | -30.26% |

Conclusion

Based on this study we concluded that:

- The upgraded Linux kernel benefits both PV and HVM AMIs. We have, therefore, upgraded the kernel on all our AMIs.

- Where supported, the HVM AMI is more performant than the PV AMI for Hadoop workloads. We now use the HVM AMI for m3 and c3-type instances as well, which previously used PV AMIs.

- Enhanced networking offers significant performance benefits for Hadoop workloads on some supported instance types.

In other cases, the benchmarks indicated that there were no significant performance improvements:

- Enhanced networking on instance types that do not support 10Gb ethernet. On these, the improvement in TeraGen/Sort was not significant. Enhanced networking is enabled on these instance types as well, so it is possible that your particular workload may see some benefits.

- Placement groups: although placement groups are supposed to improve network performance further by offering full-bisection bandwidth and low-latency connections between instances, we did not observe significant benefits from using them over and above enhanced networking. One possible reason is that with smaller cluster sizes, the probability of the nodes being co-located is pretty high anyway.

Enhanced Networking upgrades in QDS

For users of QDS: if you’re using one of the instance types supporting enhanced networking in a VPC for your cluster slaves, good news, you’re already using all these improvements in your Qubole cluster! Note that machine images for all cluster types (Hadoop, Spark, and Presto) have been upgraded. For others:

- if you’re using one of the older generation instance types that only support PV AMIs, you still get the benefit of the upgraded Linux kernel.

- if you’re using an instance type that supports enhanced networking, but your cluster is not in a VPC, you can easily configure your cluster to run in a VPC to take advantage of enhanced networking.

- if you’d like to switch to a newer instance type to use enhanced networking, we recommend you test your workload (partially or completely) against a new cluster before you make the switch. Your Mileage May Vary with respect to the performance benefits.
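The guidance in this list can be summarized as a small decision function. This is only an illustrative sketch, not part of QDS: the function name and messages are hypothetical, the set of 10Gb types comes from the instance types named in this post, and the set of families supporting enhanced networking is an assumption that you should check against current AWS documentation.

```python
# 10Gb Ethernet instance types named in this post
TEN_GBE_TYPES = {"c3.8xlarge", "r3.8xlarge", "i2.8xlarge"}

# Assumption: families that support enhanced networking (verify with AWS docs)
ENHANCED_NETWORKING_FAMILIES = {"c3", "r3", "i2"}


def networking_advice(instance_type: str, in_vpc: bool) -> str:
    """Map a cluster's instance type and VPC status to the advice above."""
    family = instance_type.split(".")[0]
    if family not in ENHANCED_NETWORKING_FAMILIES:
        return "kernel upgrade only; test your workload before switching types"
    if not in_vpc:
        return "move the cluster into a VPC to enable enhanced networking"
    if instance_type in TEN_GBE_TYPES:
        return "enhanced networking active; largest gains expected"
    return "enhanced networking active; gains may be modest"


print(networking_advice("c3.8xlarge", in_vpc=True))
print(networking_advice("c3.2xlarge", in_vpc=False))
```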

If you need any help with making any of the above changes, please contact us and we’d be glad to help!