Guest authors: Jerry Xu, Co-founder and CEO, Datatron; Lekhni Randive, Product Manager, Datatron

Qubole author: Jorge Villamariona, Sr. Product Marketing Manager, Qubole

Machine Learning (ML) models are driving growth for enterprises more than ever. Large enterprises are turning to ML to reduce costs, generate customer insights, and improve customer experience. ML use cases vary by industry: financial services firms are more concerned with detecting fraud, while entertainment providers want to offer subscribers the best content to watch. But the underlying motives and technologies for these ML models remain essentially the same, and all enterprises face the same kinds of problems, as discussed below.

The Datatron founders encountered these real-world problems while working on Lyft’s surge pricing algorithms in 2015 and 2016. As data scientists, they found it extremely tedious to deploy and manage those models and to see them running with live traffic in production. Jerry Xu, co-founder and CEO, explained that it used to take them nearly 6-8 months to make this happen. He adds that if these problems occurred in a technically sophisticated company like Lyft, they can occur in other big enterprises that also have large teams of data scientists working on complex problems. These enterprises need to deploy their models to production as fast as possible, yet many factors delay the deployment of ML models into production.

Looking ahead, the number of ML models is set to increase rapidly, as is the number of data scientists working on these complex problems. According to a KDNuggets article, the shortage of data scientists in 2018 was 151,717 in the US alone, with New York ranking highest at 34,032, followed by San Francisco at 31,798 and Los Angeles at 12,251. Given such high demand for qualified professionals, proper management of ML models is a must-have. A scalable approach needs to be devised that keeps everyone on the same page and helps organize ML models properly. All data related to these ML models, including the model files and dataset files, should be stored and managed in a system that all users can easily understand.

Let’s explore a few important factors (from an endless list of possibilities):

Continuous Catch Up between Data Scientists, Software Engineers, DevOps, and Management: In a traditional enterprise that wants to deploy ML models, there is a need for coordination between data scientists, software engineers, and DevOps. Data scientists are responsible for training, testing, and building models, but they are not responsible for the deployment of those models, even if they own them. Software engineers are responsible for integrating ML models into application code. DevOps deploys these models into production. Also, to deploy code into production, management approval is typically required, and managers, as well as senior engineers, are all involved in this process. The complete process may be too onerous in terms of getting a model deployed all the way to the production stage. This process may even take months. Getting a model deployed requires continuous communication and multiple meetings, which means that this process is not scalable when hundreds of ML models need to be deployed and maintained by the enterprise.

Machine Learning Model Versioning: When a model is reworked by adding new features to its feature vector, the result is a new model. What if, in the future, one needs to go back to an older version of the model for this use case? Should you redo the same work, or maintain a history of the different versions of the model internally? Tracking such version changes across multiple ML models becomes extremely important, regardless of the number of data scientists in the company. There needs to be an easy-to-use hosting solution that holds metadata for every model that data scientists have worked on. It should also include the different versions of each ML model developed over time, along with the location where they are stored.
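To make the idea of a version history concrete, here is a minimal sketch of such a metadata store. It is an in-memory illustration only, not Datatron's implementation; the names `ModelVersion` and `ModelRegistry`, the fields, and the example storage URI are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class ModelVersion:
    """Metadata for one version of a model (fields are illustrative)."""
    version: int
    features: Tuple[str, ...]   # feature vector used by this version
    artifact_uri: str           # location where the model file is stored
    created_at: datetime


@dataclass
class ModelRegistry:
    """Hypothetical in-memory stand-in for a hosted metadata store."""
    _versions: Dict[str, List[ModelVersion]] = field(default_factory=dict)

    def register(self, name, features, artifact_uri):
        """Append a new version; older versions are never overwritten."""
        history = self._versions.setdefault(name, [])
        entry = ModelVersion(
            version=len(history) + 1,
            features=tuple(features),
            artifact_uri=artifact_uri,
            created_at=datetime.now(timezone.utc),
        )
        history.append(entry)
        return entry

    def get(self, name, version: Optional[int] = None):
        """Return the latest version, or a specific older one."""
        history = self._versions[name]
        if version is None:
            return history[-1]
        if not 1 <= version <= len(history):
            raise KeyError(f"{name} has no version {version}")
        return history[version - 1]


registry = ModelRegistry()
registry.register("fraud", ["amount"], "s3://example-bucket/fraud/v1.pkl")
registry.register("fraud", ["amount", "country"], "s3://example-bucket/fraud/v2.pkl")
older = registry.get("fraud", version=1)  # going back does not require redoing the work
```

Because every change to the feature list produces a new entry rather than replacing the old one, returning to an earlier version is a lookup instead of a rebuild.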

Associating Datasets and ML Models including Versioning and Timestamps: ML models and datasets change over time as new data arrives. The datasets that models are trained on therefore need to be tracked as well. Describing and associating datasets and models should be easy and accurate. The datasets should be versioned, and the models trained on each dataset should be part of the record. The same holds true for feature vector versioning. Closing this loop of versioning and presenting it as metadata will not only help all users understand these associations but will ultimately help them make better use of these ML models. Presenting information in a clear, easy-to-understand manner saves significant time for data scientists, software engineers, DevOps, and the management team. Email exchanges should be limited to filling knowledge gaps, and all collaboration should happen on a common platform.
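One possible way to record these associations is sketched below. It assumes a content hash as the dataset version, which is only one of several reasonable choices; `dataset_fingerprint`, `TrainingRecord`, and `LineageLog` are hypothetical names introduced for illustration.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone


def dataset_fingerprint(rows):
    """Derive a short, content-based version ID from a dataset's rows."""
    digest = hashlib.sha256()
    for row in rows:
        digest.update(repr(row).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()[:12]


@dataclass(frozen=True)
class TrainingRecord:
    """Links one model version to the dataset and feature versions it used."""
    model_name: str
    model_version: int
    dataset_version: str
    feature_version: str
    trained_at: datetime


class LineageLog:
    """Hypothetical append-only log of training runs."""

    def __init__(self):
        self._records = []

    def record(self, model_name, model_version, rows, feature_version):
        entry = TrainingRecord(
            model_name=model_name,
            model_version=model_version,
            dataset_version=dataset_fingerprint(rows),
            feature_version=feature_version,
            trained_at=datetime.now(timezone.utc),
        )
        self._records.append(entry)
        return entry

    def models_trained_on(self, dataset_version):
        """Answer: which model versions were trained on this dataset?"""
        return [
            (r.model_name, r.model_version)
            for r in self._records
            if r.dataset_version == dataset_version
        ]


january = [(1, 120.0), (2, 35.5)]
february = january + [(3, 980.0)]   # new data arrived, so the version changes

log = LineageLog()
first = log.record("fraud", 1, january, feature_version="fv1")
second = log.record("fraud", 2, february, feature_version="fv1")
```

With the timestamp, dataset version, and feature version stored on every record, the question "which data was this model trained on?" can be answered from metadata rather than from email threads.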

Model Deployment and Scoring at Scale and High Availability: Model deployment is defined as making ML models available as REST API endpoints that provide prediction functionality to any application that can call the API. Depending on the use case, predictions may be needed online, in real time, or offline. If predictions are needed online, then multiple instances of the ML model can be made available logically, and it is important to keep these models highly available. Similarly, for offline models, users should be able to specify the ML model and the dataset(s) on which scoring is desired. Having the model score GBs or TBs of data should not be a concern for the user. It should also be easy to view the progress of jobs over time. The ability to perform these operations at scale for thousands of models is of paramount importance, and the system should not go down over time; in other words, it should be highly available.
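As a rough illustration of "a model behind a REST endpoint", the sketch below uses only Python's standard library. The `/predict` path, the feature names, and the fixed weights in `predict` are assumptions made for the example; a real deployment would load a trained model and run several such instances for availability.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Placeholder model: a fixed linear score, not a trained model."""
    weights = {"amount": 0.002, "night": 0.3}   # illustrative values only
    score = sum(weights.get(k, 0.0) * float(v) for k, v in features.items())
    return {"score": round(min(score, 1.0), 4)}


class PredictHandler(BaseHTTPRequestHandler):
    """Accepts POST /predict with a JSON object of numeric features."""

    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404, "unknown endpoint")
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            features = json.loads(self.rfile.read(length))
            body = json.dumps(predict(features)).encode("utf-8")
        except (ValueError, TypeError, AttributeError):
            self.send_error(400, "expected a JSON object of numeric features")
            return
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def make_server(port=0):
    """Bind to localhost; port 0 lets the OS pick a free port."""
    return HTTPServer(("127.0.0.1", port), PredictHandler)


if __name__ == "__main__":
    server = make_server(8080)
    server.serve_forever()
```

Any application that can issue an HTTP POST with a JSON body can then request a prediction, which is what decouples the model from the application code that consumes it.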

ML Model Monitoring:

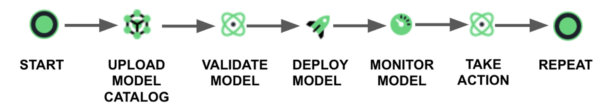

Data scientists work on datasets in a lab environment and these are mostly not live production datasets. For this reason, ML models often fail in production with live data. Being able to monitor ML models in production is essential and beneficial to data scientists. More important than monitoring is the ability to react to circumstances when an ML model is not behaving correctly. Just knowing that ML models are failing on live production data is a key requirement. But being able to respond to such scenarios is a step beyond what is currently available with existing systems. Such advanced monitoring takes organizations way ahead in terms of their ability to exploit ML with low operating costs. The complete ML creation, deployment, and monitoring process should look like this:
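One piece of that process, noticing that a model is misbehaving on live data and reacting to it, could be sketched as follows. This is a deliberately simple assumption: it compares the mean of recent live scores against a baseline measured in the lab and falls back to a safe default when they diverge. `ScoreMonitor`, `serve`, and the thresholds are hypothetical.

```python
from collections import deque
from statistics import mean


class ScoreMonitor:
    """Flags a model whose recent live scores drift away from a lab baseline."""

    def __init__(self, baseline_mean, tolerance, window=100):
        self.baseline_mean = baseline_mean   # mean score seen on lab data
        self.tolerance = tolerance           # allowed absolute deviation
        self.scores = deque(maxlen=window)   # sliding window of live scores

    def observe(self, score):
        """Record one live prediction; return True if the model looks unhealthy."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough live data to judge yet
        return abs(mean(self.scores) - self.baseline_mean) > self.tolerance


def serve(score, monitor, fallback_score):
    """React, not just detect: use a fallback while the model is unhealthy."""
    if monitor.observe(score):
        return fallback_score
    return score


monitor = ScoreMonitor(baseline_mean=0.2, tolerance=0.1, window=3)
served = [serve(s, monitor, fallback_score=0.0) for s in (0.2, 0.2, 0.2, 0.9)]
```

In the usage lines, the first three scores match the baseline and are served as-is, while the fourth pushes the window mean outside the tolerance, so the fallback is returned instead.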

ML is progressing quickly, but the tooling behind ML is just as critical. Without the right tools at hand, the ML process will be long, tedious, and cumbersome. The need to govern ML models is quickly outpacing companies’ infrastructure capabilities and the bandwidth of their engineering and data science teams. Building models isn’t the problem; creating the right infrastructure to support those models is. Datatron is addressing this need by speeding up deployments, detecting problems early, and increasing the efficiency of managing multiple models at scale. Organizations should step up and start thinking about their ML initiatives. They should evaluate where they stand today and also assess the ML capabilities available in the market.

Click here to learn more about how Datatron helps organizations streamline the ML model lifecycle. Another important aspect of the ML model lifecycle is selecting a data platform to manage the technical and financial challenges of implementing these ML models. Qubole complements Datatron’s capabilities and it has helped countless customers overcome these challenges.