Authored by Mohan Krishnamurthy, Ph.D, Senior Product Manager, Qubole

Qubole’s Notebooks offer a GUI for exploring and interacting with data, serving diverse groups of users including data scientists, data analysts, and data engineers. Beyond this interactivity, Notebooks have evolved into a Data IDE and play a crucial role in productionizing your code, which is the next logical step after data exploration and model building.

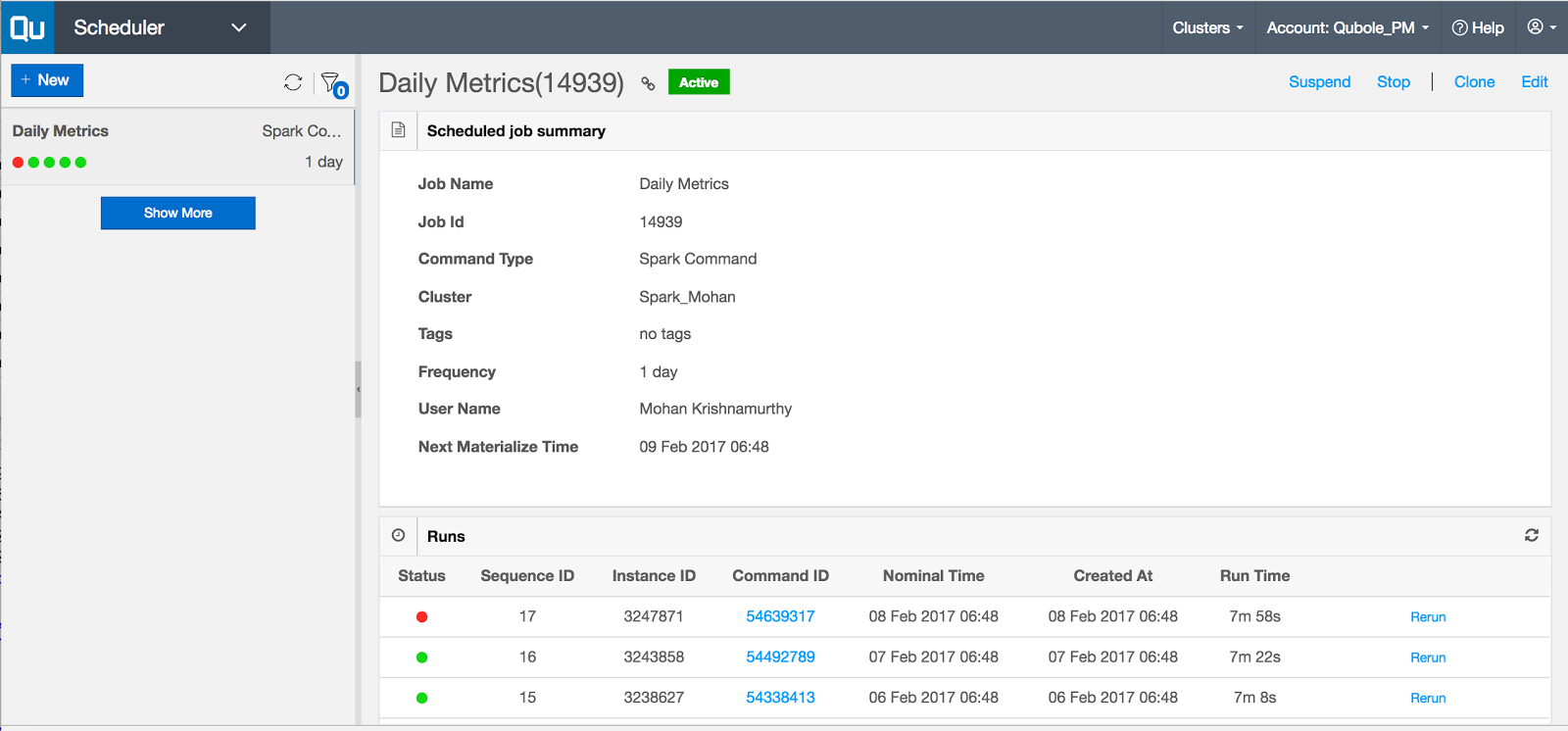

The recently launched Qubole Notebooks API lets you programmatically access Notebooks created within Qubole Data Service (QDS) and, more importantly, run Notebooks as scheduled jobs in your production data pipelines.
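To illustrate what programmatic access can look like, the sketch below prepares (but does not send) an HTTP request asking QDS to run a Notebook as a Spark command. It is a minimal sketch under stated assumptions: the base URL, the `X-AUTH-TOKEN` header, and the `command_type`, `language` and `note_id` fields are modeled on the QDS REST API and should be checked against Qubole's API reference before use. The function name `build_notebook_run_request`, the token, and the Notebook ID are hypothetical placeholders.

```python
import json
import urllib.request

# Assumed QDS REST endpoint for submitting commands; verify against the API docs.
QDS_COMMANDS_URL = "https://api.qubole.com/api/v1.2/commands"


def build_notebook_run_request(api_token: str, note_id: int) -> urllib.request.Request:
    """Build (without sending) a request that runs a Notebook as a Spark command.

    Hypothetical helper: the field names below are assumptions, not a
    confirmed Qubole contract.
    """
    payload = {
        "command_type": "SparkCommand",  # assumed: Notebooks run as Spark commands
        "language": "notebook",          # assumed: mirrors the "Notebook" option in the UI
        "note_id": note_id,              # hypothetical: ID of the Notebook to execute
    }
    return urllib.request.Request(
        QDS_COMMANDS_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "X-AUTH-TOKEN": api_token,  # assumed authentication header name
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_notebook_run_request("YOUR_API_TOKEN", 1234)
    print(req.get_method(), req.full_url)
    # Submitting for real would be: urllib.request.urlopen(req)
```

Keeping the request construction separate from `urlopen` makes the payload easy to inspect or unit test before any call reaches a live cluster.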

Scheduling Notebooks

This new feature removes a limitation of the Notebooks’ cron scheduler: previously, the cluster associated with a Notebook had to be running for the Notebook to execute code and queries.

In Part 1 of this two-part series, we outline quick and easy steps to schedule Notebooks using the workflow management tools available in QDS, such as Scheduler and Airflow.

Steps to Schedule Notebooks

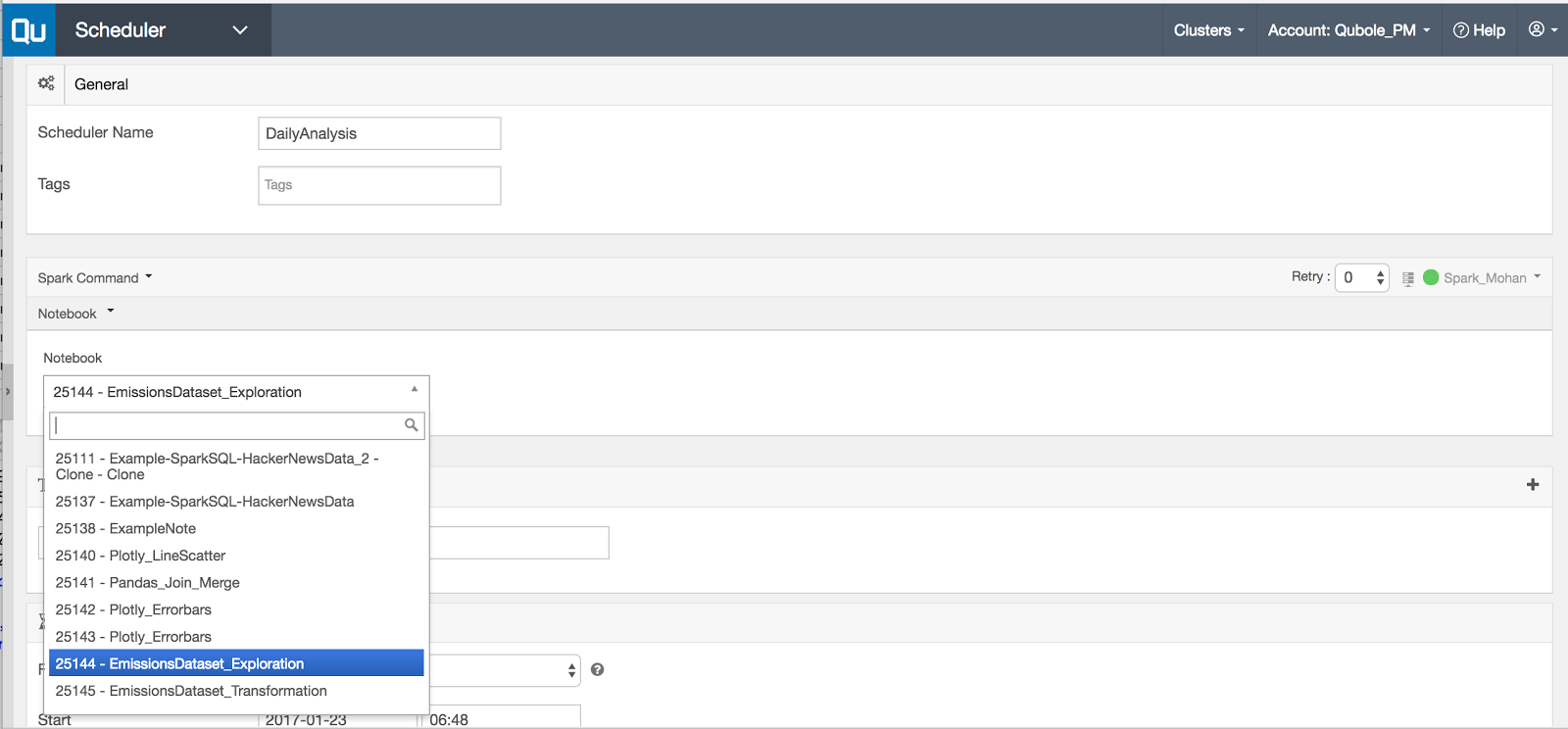

- Browse to the Scheduler interface

- Click on + New to create a new scheduled job

- Enter Scheduler Name

- In the next section, select Spark Command from the dropdown

- Select Notebook as the Spark command type from the dropdown (the default is Scala)

- Select a Notebook you’d like to schedule from the dropdown

- In the Schedule section, select the Frequency and set the Start, End, and Time Zone attributes

- Click on Save
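The UI steps above can be mirrored as a single job definition. The sketch below is a minimal, hypothetical illustration that collects the same choices (name, Spark command, Notebook, frequency, start, end, time zone) into a dictionary and checks them before saving. The field names, the allowed time units, and `build_schedule_payload` itself are assumptions for illustration, not Qubole's documented Scheduler API.

```python
import json
from datetime import datetime

# Hypothetical set of time units; check the Scheduler docs for the real values.
ALLOWED_TIME_UNITS = {"minutes", "hours", "days", "weeks", "months"}


def build_schedule_payload(name, note_id, frequency, time_unit, start, end, time_zone="UTC"):
    """Mirror the Scheduler UI steps as a dict (all field names are assumptions)."""
    if not name:
        raise ValueError("a Scheduler name is required")
    if frequency <= 0:
        raise ValueError("frequency must be a positive integer")
    if time_unit not in ALLOWED_TIME_UNITS:
        raise ValueError(f"unsupported time unit: {time_unit}")
    if end <= start:
        raise ValueError("end must be later than start")
    return {
        "name": name,                    # step: enter the Scheduler name
        "command_type": "SparkCommand",  # step: select Spark Command
        "command": {
            "language": "notebook",      # step: select Notebook (default is Scala)
            "note_id": note_id,          # step: pick the Notebook to schedule
        },
        "frequency": frequency,          # step: Schedule section, Frequency
        "time_unit": time_unit,
        "start_time": start.isoformat(timespec="minutes"),  # step: Start
        "end_time": end.isoformat(timespec="minutes"),      # step: End
        "time_zone": time_zone,          # step: Time Zone
    }


if __name__ == "__main__":
    payload = build_schedule_payload(
        "daily-notebook", 1234, 1, "days",
        datetime(2024, 1, 1, 2, 0), datetime(2024, 12, 31, 2, 0),
        "America/Los_Angeles",
    )
    print(json.dumps(payload, indent=2))
```

Validating the inputs up front catches the most common mistakes (a missing name or an end date before the start date) before the job is saved.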

Stay Tuned!

In Part 2, we will discuss Notebook Workflows, so stay tuned!

If you’re interested in QDS, sign up today for a free trial! For an overview of QDS, register for a live demo.