Co-authored by Yogesh Garg and Sumit Maheshwari, Members of the Technical Staff at Qubole. Sumit Maheshwari is also part of Apache Airflow PPMC.

We are excited to announce that Airflow as a Service on Qubole Data Service (QDS) is GA and joins the family of Hadoop 1, Hadoop 2, Spark, Presto, and HBase offered as a service on QDS. Data teams can now focus on creating dynamic, extensible, and scalable data pipelines while leveraging Qubole’s fully managed and automated cluster lifecycle management for Airflow clusters in the cloud. (More on Airflow on QDS below.)

What is Airflow?

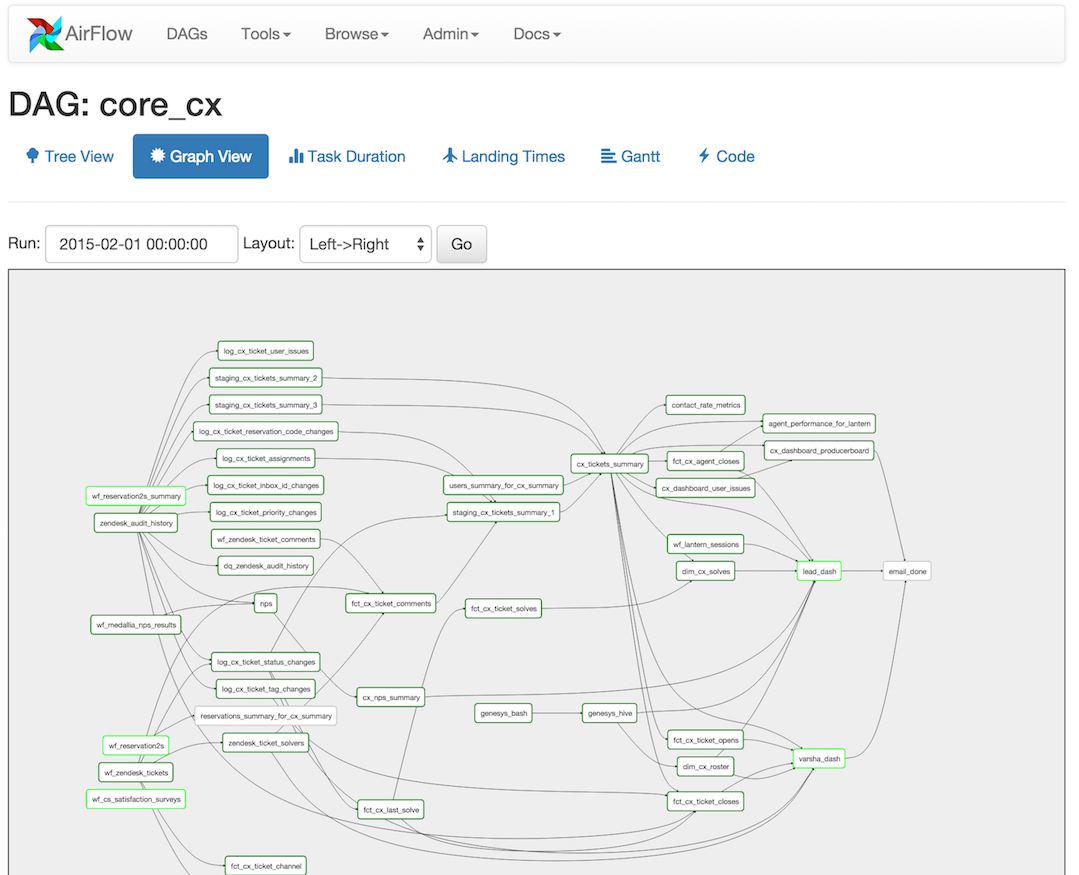

Airflow is a workflow management platform built by Airbnb that is now part of the Apache Incubator. It allows you to programmatically author, schedule, and monitor workflows. You can easily visualize your data pipelines’ dependencies, progress, logs, code, and success status, and trigger tasks directly from the UI. You can also analyze where execution time is spent within a workflow and see at what time of day different tasks usually finish. The graphical UI is also suitable for administrative functions such as managing connections.

[Source: GitHub]

Airflow vs Oozie

One of the features that most distinguishes Airflow from Oozie is how it represents directed acyclic graphs (DAGs) of tasks. An Airflow DAG is defined in a Python script. This allows data engineers to express complex workflows quite easily using an object-oriented paradigm and to transform intermediate data sets using rich Python utilities. This is a significant shift from the XML paradigm in Oozie. Oozie has also been hard to maintain operationally, owing to DAG synchronization between the database and HDFS on ephemeral cloud storage.
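To make the "DAG as a Python script" idea concrete, here is a minimal sketch in plain Python. It is not Airflow's API: the `Task` class, the `run_order` and `run` helpers, and the three-step pipeline are hypothetical names invented for illustration, and ordering relies on the standard library's `graphlib` module (Python 3.9+). It only shows how tasks and their dependencies can be expressed as ordinary objects rather than XML.

```python
from graphlib import TopologicalSorter


class Task:
    """A named unit of work; `upstream` holds the tasks it waits on."""

    def __init__(self, name, action):
        self.name = name
        self.action = action
        self.upstream = set()

    def __rshift__(self, other):
        # `a >> b` declares that b runs after a; returning b allows chaining.
        other.upstream.add(self)
        return other


def run_order(tasks):
    """Return task names in an order that respects every dependency."""
    graph = {t.name: {u.name for u in t.upstream} for t in tasks}
    # static_order() raises graphlib.CycleError if the graph is not acyclic.
    return list(TopologicalSorter(graph).static_order())


def run(tasks):
    """Execute each task's action once, in dependency order."""
    by_name = {t.name: t for t in tasks}
    return [by_name[name].action() for name in run_order(tasks)]


# Hypothetical three-step pipeline built with ordinary Python objects.
extract = Task("extract", lambda: "rows extracted")
transform = Task("transform", lambda: "rows transformed")
load = Task("load", lambda: "rows loaded")
extract >> transform >> load

pipeline = [load, extract, transform]  # declaration order does not matter
```

Because the pipeline is just Python, loops, functions, and classes can generate tasks and dependencies dynamically, which is hard to do in a static XML definition.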

Airflow on QDS

- Automated Cluster Lifecycle Management – Airflow clusters on QDS leverage Qubole’s automated cluster lifecycle management infrastructure currently in production and shared across Hadoop, Spark, Presto, and HBase clusters. As a result, you can take advantage of one-click cluster provisioning, specify Airflow-specific settings, configure clusters to launch securely within your VPCs and Subnets, and select from 40+ AWS instance types.

- Integration between QDS and Airflow – In our previous blog, we introduced Qubole Operator which allows you to tightly integrate Airflow with your data pipelines in QDS. Using this operator, you will be able to use Airflow for the creation and management of complex data pipelines while submitting jobs directly to QDS.

- Monitoring – You can monitor the status of your workflows via the Airflow webserver that is readily available upon launching Airflow clusters. In addition, Qubole also provides monitoring through Ganglia & Celery Dashboards.

- Reliability – We’ve extended the codebase to make it more reliable. In the unlikely event that Airflow becomes unresponsive, restarting the Airflow cluster will automatically bring Qubole Operator jobs back to their original state.

- Better User Experience – We’ve implemented a solution to cache assets that significantly reduces the loading time of the Airflow webserver. In addition, we have added the ability to easily navigate from the Airflow web interface to QDS. These enhancements are important because monitoring data pipelines is a crucial part of processing Big Data workloads.

- Security and Accessibility – All actions on Airflow clusters go through the existing role-, user-, and group-based security currently in production on QDS. This eliminates the need to implement custom ACLs or other security mechanisms to authorize access to your Airflow clusters and workflows.

For technical details on setup, configuration, deploying, and using Airflow as a service on QDS, please refer to our documentation.

In Summary

We would like to thank the Airflow open source community for building a fantastic platform and giving us the opportunity to be a part of its great growth story. We are committed to contributing back to the community and to continually improving Airflow as a service for our customers.