We are constantly making additions to our Qubole Data Service (QDS) product to better meet the security, data governance, and compliance needs of our enterprise customers. In the past year, we’ve made significant improvements around network architecture, data encryption options, and internal operations.

This post outlines some of the QDS options that you can start using today.

Network Architecture

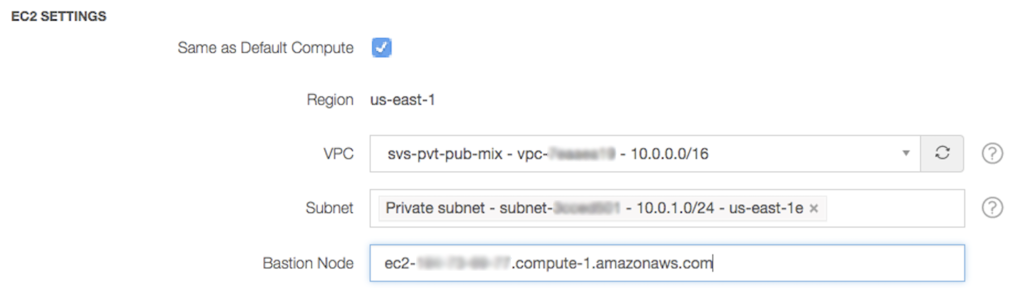

We now support Hadoop, Spark, and Presto clusters in Amazon VPC private subnets. On AWS, Virtual Private Clouds (VPCs) are increasingly popular, particularly when combined with private subnets. Clusters brought up in a private subnet are not reachable from the public internet, which makes them less exposed to potential attacks.

We have a walkthrough for setting up a cluster within private subnets in our documentation. We have simplified the process so that all the settings, including the selection of the VPC, the private subnet, and the bastion host, can be entered directly in the Advanced Configuration portion when creating or editing a cluster. Many of our large enterprise customers have already started using VPC private subnets with their QDS clusters – you can try it out today!
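As a rough illustration of the three settings mentioned above, the sketch below groups them and reports any that were left empty before a cluster is created or edited. The class and field names are hypothetical labels chosen for this example; they are not actual QDS configuration keys.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrivateSubnetSettings:
    # Hypothetical field names for the three settings named in the text:
    # the VPC, the private subnet, and the bastion host.
    vpc_id: str
    private_subnet_id: str
    bastion_host: str

    def missing(self):
        """Return the names of settings that were left empty."""
        return [name for name, value in vars(self).items() if not value.strip()]


# Example values are placeholders, not real AWS resources.
settings = PrivateSubnetSettings("vpc-0abc", "subnet-0def", "")
print(settings.missing())
```

A check like this only catches incomplete input; whether the subnet is actually private depends on its route table in AWS.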

Encryption Options

There are three major areas where data encryption can be important:

- Data in transit

- Data in the object store

- Temporary data in HDFS

Encryption for temporary data in HDFS is already available via a simple checkbox in the cluster settings, as described here. Below are some of the new encryption options we have around data in transit and data in the object store.

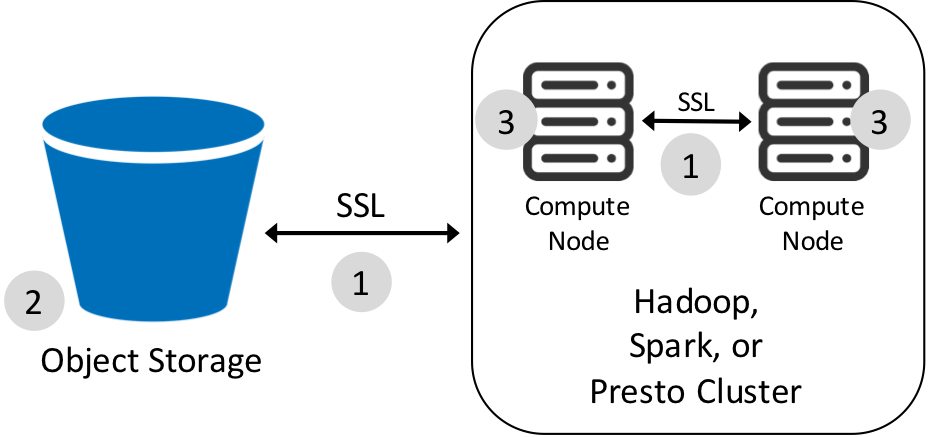

Data in Transit

We recently added support for Simple Authentication and Security Layer (SASL) encryption for data in transit within Spark clusters. It can be enabled through the Spark settings in the cluster configuration. We’ve also opened early access to SSL for data in transit on Presto clusters.

Please note that encrypting data in transit can affect performance. For this reason, these options are not enabled by default. Security-conscious customers, or those that need to meet compliance requirements such as HIPAA, can turn them on to secure their clusters.
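Since the Spark option is off by default, it has to be set explicitly. The sketch below renders the two standard Apache Spark properties that turn on authentication and SASL encryption in `spark-defaults.conf` format. How QDS accepts these overrides in the cluster settings is an assumption here, and the helper name is made up for illustration.

```python
# Standard Apache Spark properties; SASL encryption requires
# authentication to be enabled as well.
SASL_PROPERTIES = {
    "spark.authenticate": "true",
    "spark.authenticate.enableSaslEncryption": "true",
}


def to_spark_defaults(properties):
    """Render properties as spark-defaults.conf lines ('key value')."""
    return "\n".join(f"{key} {value}" for key, value in sorted(properties.items()))


print(to_spark_defaults(SASL_PROPERTIES))
```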

Encryption in the Object Store

The object store (such as Amazon S3 or Azure Blob Storage) usually serves as the primary source of truth for our customers’ data. Encryption for data at rest is generally up to the cloud provider. QDS provides the hooks to integrate with the encryption options provided by the object store itself.

For Amazon S3, QDS has always supported server-side encryption. With this option, S3 performs the encryption on writes and the decryption on reads, and the encryption key is stored within the S3 service. Our customers also wanted an option to manage the encryption key themselves. As a result, we recently added support for client-side encryption via integration with the AWS Key Management Service (KMS).

In this model, the customer delegates a key from the KMS that a QDS Hadoop or Spark cluster will use when reading/writing data. This gives the customer more control in managing and rotating the encryption key. It also provides the same simplicity as before, since encryption and decryption are done automatically by the QDS cluster. Client-side encryption with AWS KMS is available for early access. You can read more in our documentation.
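For comparison, the server-side option described earlier maps to a single standard S3 request header. The sketch below builds that header for an object upload and checks whether a response reports encryption at rest. The function names are illustrative, and this is not a description of how QDS issues its S3 requests.

```python
# Standard S3 header that requests server-side encryption with S3-managed keys.
SSE_HEADER = "x-amz-server-side-encryption"


def sse_s3_put_headers(content_type="application/octet-stream"):
    """Headers for an S3 PUT that ask S3 to encrypt the object at rest."""
    return {"Content-Type": content_type, SSE_HEADER: "AES256"}


def encrypted_at_rest(response_headers):
    """True if a response reports server-side encryption for the object."""
    # HTTP header names are case-insensitive, so normalize before lookup.
    normalized = {key.lower(): value for key, value in response_headers.items()}
    return SSE_HEADER in normalized
```

With client-side encryption the data is already encrypted before it reaches S3, so no such header is involved.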

Data Governance

QDS has always had a variety of options for application-level governance. Customers can create custom roles and group users into these roles to control access around editing cluster settings, running specific command types, and other areas of the product.

Recently, we also added support for Hive Authorization for data-level governance. Hive Authorization can be used to grant and revoke access to Hive metastore tables at the user level. This is valuable when you want to segment your users based on their intended level of access to data. For example, if your entire data lake is accessible in your QDS account, you may want to restrict access to certain tables to specific users for security or compliance reasons.

Hive Authorization is currently supported for Hive and Presto, with support for Spark SQL coming soon. Together with QDS’s ability to granularly set access permissions on command types, you can define a SQL user that has access only to Hive and Presto commands and further use Hive Authorization to control their data access.
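To make user-level grants concrete, the sketch below generates Hive `GRANT` and `REVOKE` statements for read access to a table. The statement syntax follows Apache Hive's authorization grammar; the helper names, the table, and the user are hypothetical, and which privileges QDS exposes is not covered here.

```python
import re

# Accept plain identifiers, optionally qualified as database.table.
_TABLE = re.compile(r"^[A-Za-z_]\w*(\.[A-Za-z_]\w*)?$")
_USER = re.compile(r"^[A-Za-z_]\w*$")


def _checked(pattern, name):
    """Reject names that could smuggle extra SQL into the statement."""
    if not pattern.match(name):
        raise ValueError(f"unsafe identifier: {name!r}")
    return name


def grant_select(table, user):
    return f"GRANT SELECT ON TABLE {_checked(_TABLE, table)} TO USER {_checked(_USER, user)};"


def revoke_select(table, user):
    return f"REVOKE SELECT ON TABLE {_checked(_TABLE, table)} FROM USER {_checked(_USER, user)};"


# Hypothetical table and user names.
print(grant_select("finance.payroll", "analyst_1"))
print(revoke_select("finance.payroll", "analyst_1"))
```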

Compliance

We recently announced the successful completion of SOC 2 Type II. In addition, we have added new options around data residency and account settings.

QDS in AWS Germany (Frankfurt) Region

For customers with EU data residency compliance requirements, we now have a dedicated, isolated tier of our product hosted entirely within the AWS Germany (Frankfurt) region. This tier can be accessed at eu-central-1.qubole.com and requires a new QDS account to be created. Other than guaranteeing data residency within the EU, QDS works exactly the same way. You can read more about this tier in our documentation.

HTTPS for all REST API calls and Account Timeout Settings

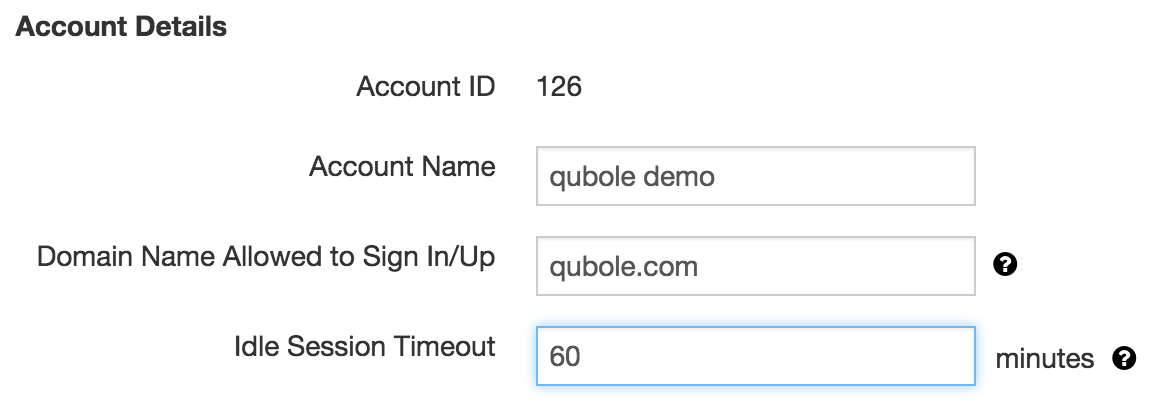

Finally, we’ve made two minor additions to help enforce compliance requirements for some of our customers. QDS now enforces HTTPS for all REST API requests. This is already enabled globally by default and non-HTTPS requests are now rejected.

We also added the ability to set a session timeout for each QDS account. This allows system administrators to set a custom timeout value, after which UI sessions are automatically logged out. You can configure this by accessing Account Settings from within the Control Panel.
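Because non-HTTPS API requests are rejected, a client can fail fast on a misconfigured URL instead of waiting for the server to refuse it. The sketch below shows one way to do that with the Python standard library. The URL path and the `X-AUTH-TOKEN` header name are assumptions made for this example, and nothing is sent over the network.

```python
from urllib.parse import urlsplit
from urllib.request import Request


def build_api_request(url, auth_token):
    """Build a request object, refusing any URL that is not HTTPS."""
    if urlsplit(url).scheme.lower() != "https":
        raise ValueError(f"REST API calls must use HTTPS, got: {url}")
    # Header name is an assumption for illustration.
    return Request(url, headers={"X-AUTH-TOKEN": auth_token, "Accept": "application/json"})


# Hypothetical endpoint path on the EU tier host named earlier in this post.
request = build_api_request("https://eu-central-1.qubole.com/api/v1.2/commands", "my-token")
print(request.full_url)
```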

In Summary

We’re committed to keeping the highest standards around security, governance, and compliance in QDS. The additions that we’ve made have already been widely adopted by our customers, and we will continue to add more in the coming months.

If you’re interested in QDS, sign up today for a free trial!

To get an overview of QDS, click here to register for a live demo.